Authenticity & AI Detection

February 17, 2026

Image Authenticity Verification Methods: What Works Today

Image authenticity verification has moved from a niche technical concern to a practical necessity. AI-generated and manipulated images now circulate alongside real ones, often without context, and organizations are increasingly asked to defend why a particular image should be trusted at all.

Visual inspection and metadata alone are not sufficient to establish trust. Verifying where an image comes from, whether it has been altered, and how it has been used now requires more robust and resilient approaches.

Why Verifying Image Authenticity Has Become So Difficult

The difficulty of image authenticity verification is not driven by a single technological shift, but by a combination of scale, speed, and transformation.

Images are created and shared at unprecedented volume. They are instantly copied, compressed, cropped, reformatted, and reposted across platforms that routinely strip contextual information. In this environment, verification methods designed for static files or controlled workflows quickly break down.

Authenticity is also no longer a simple question of “real versus fake.” What matters today is the ability to prove origin, integrity, and history. Who created the image? Under what conditions? Has it been modified, and if so, how? These questions require evidence, not inference.

At the same time, regulatory, journalistic, and corporate pressures are turning authenticity into an operational requirement. Newsrooms must defend the legitimacy of published images. Brands must respond quickly to visual misinformation. Regulators increasingly expect transparency around synthetic and non-synthetic content. In all cases, authenticity must be demonstrable, not assumed.

The Main Image Authenticity Verification Methods Explained

A wide range of image authenticity verification methods are used today. Each plays a role, but each also has structural limits that matter in real-world conditions.

a) Contextual & Investigative Methods (Human-Led)

Contextual and investigative methods rely primarily on human analysis.

Reverse image search is commonly used to trace image reuse, identify earlier appearances, or detect when visuals are taken out of context. Visual and forensic inspection focuses on cues such as inconsistent lighting, unnatural shadows, cloning artifacts, or splicing edges that may indicate manipulation.

These approaches are valuable in journalism, research, and investigations. However, they are manual, subjective, and difficult to scale. They also rely on the existence of comparable reference images and on human interpretation, which limits their reliability under time pressure or at high volume.
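Reverse image search services do not publish their internals, but one widely used building block for spotting reused or lightly edited copies is a perceptual hash. The sketch below implements the simple "average hash" (aHash) in pure Python. The function names and the toy gradient images are illustrative assumptions; production systems rely on far more robust features.

```python
def average_hash(pixels: list[list[float]], size: int = 8) -> int:
    """Perceptual 'average hash' of a grayscale image (rows of 0-255 values).

    The image is reduced to size x size cells by block averaging; each cell
    contributes one bit: 1 if it is brighter than the mean of all cells.
    Assumes the image is at least size x size pixels.
    """
    h, w = len(pixels), len(pixels[0])
    cells = []
    for by in range(size):
        for bx in range(size):
            rows = range(by * h // size, (by + 1) * h // size)
            cols = range(bx * w // size, (bx + 1) * w // size)
            block = [pixels[y][x] for y in rows for x in cols]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; a small distance suggests a near-duplicate."""
    return bin(a ^ b).count("1")


# Toy 64x64 images: a horizontal gradient, a brightened copy, an unrelated vertical gradient.
original = [[x * 4 for x in range(64)] for _ in range(64)]
brightened = [[min(255, v + 20) for v in row] for row in original]
unrelated = [[y * 4 for _ in range(64)] for y in range(64)]

h_orig, h_edit, h_other = map(average_hash, (original, brightened, unrelated))
print(hamming(h_orig, h_edit))   # 0: the brightness edit does not change the hash
print(hamming(h_orig, h_other))  # 32 of 64 bits differ
```

A global brightness change leaves the hash untouched, but aHash is easily thrown off by crops or rotations, and a match is only as good as the reference images available to compare against.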

b) File-Based & Analytical Methods (Data-Dependent)

File-based methods analyze data attached to the image itself.

Metadata analysis examines information such as capture device, timestamps, geolocation, or editing software. Error Level Analysis (ELA) and compression artifact analysis look for localized inconsistencies that may reveal editing or recompression.

These techniques can be effective in controlled environments, particularly when original files are available. However, they are fragile by design. Metadata is frequently stripped by platforms and workflows, while compression and format conversion can invalidate analytical signals. Once an image circulates, these methods often lose their evidentiary value.
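A minimal sketch of why file-attached metadata is fragile: in a JPEG, Exif data sits in its own APP1 header segment, so dropping that segment removes the metadata without touching a single pixel. The helper names and the synthetic byte layout below are assumptions for illustration, and the parser is simplified (it ignores fill bytes and standalone markers before the scan).

```python
import struct

SOI = b"\xff\xd8"  # JPEG start-of-image marker


def parse_header(data: bytes) -> tuple[list[tuple[int, bytes]], bytes]:
    """Split a JPEG into (marker, raw segment) pairs plus the tail that starts
    at the SOS marker (compressed scan data through EOI)."""
    if data[:2] != SOI:
        raise ValueError("not a JPEG: missing SOI marker")
    segments, pos = [], 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xDA:  # SOS: everything from here on is image data
            return segments, data[pos:]
        # The 2-byte big-endian length counts itself but not the marker bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        segments.append((marker, data[pos:pos + 2 + length]))
        pos += 2 + length
    raise ValueError("no SOS marker found")


def has_exif(data: bytes) -> bool:
    """Exif lives in an APP1 (0xE1) segment whose payload begins with the Exif identifier."""
    segments, _ = parse_header(data)
    return any(m == 0xE1 and seg[4:10] == b"Exif\x00\x00" for m, seg in segments)


def strip_metadata(data: bytes) -> bytes:
    """Drop APP1-APP15 segments (Exif, XMP, ...) but keep APP0 and the pixel data,
    roughly mimicking the stripping the text describes."""
    segments, tail = parse_header(data)
    kept = [seg for m, seg in segments if not 0xE1 <= m <= 0xEF]
    return SOI + b"".join(kept) + tail


def segment(marker: int, payload: bytes) -> bytes:
    """Build one header segment: 0xFF, marker, length, payload."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(payload) + 2) + payload


# Synthetic JPEG skeleton (structurally plausible, not a decodable image).
fake_jpeg = (
    SOI
    + segment(0xE0, b"JFIF\x00\x01\x01\x00\x00\x01\x00\x01\x00\x00")
    + segment(0xE1, b"Exif\x00\x00II*\x00" + bytes(8))
    + segment(0xDB, bytes(65))
    + segment(0xDA, bytes(6)) + b"\x12\x34" + b"\xff\xd9"
)
stripped = strip_metadata(fake_jpeg)
print(has_exif(fake_jpeg), has_exif(stripped))  # True False
```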

c) Automated Detection Methods (Probabilistic)

Automated detection relies on machine learning models trained to recognize patterns associated with AI-generated or manipulated images.

These systems are useful for large-scale screening and prioritization. They can help flag suspicious content or estimate whether an image is likely synthetic.

However, they remain probabilistic. They infer likelihood rather than confirm origin. Their performance depends on training data and model updates, making them vulnerable to model drift and adversarial adaptation. As a result, they are ill-suited for high-stakes verification where certainty is required.
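Why "likely synthetic" is not proof can be made concrete with Bayes' rule. The sketch assumes a detector with a 95% true-positive rate and a 5% false-positive rate; these figures are hypothetical and do not describe any real tool.

```python
def posterior_synthetic(prior: float, tpr: float, fpr: float) -> float:
    """P(image is synthetic | detector flags it), by Bayes' rule.

    prior: share of synthetic images in the screened population (assumed)
    tpr:   rate at which the detector flags synthetic images
    fpr:   rate at which the detector wrongly flags authentic images
    """
    flagged_synthetic = tpr * prior
    flagged_authentic = fpr * (1.0 - prior)
    return flagged_synthetic / (flagged_synthetic + flagged_authentic)


# Hypothetical detector, evaluated on populations with different shares of synthetic images.
for prior in (0.01, 0.10, 0.50):
    p = posterior_synthetic(prior, tpr=0.95, fpr=0.05)
    print(f"prior={prior:.2f} -> P(synthetic | flagged)={p:.2f}")
# prior=0.01 -> P(synthetic | flagged)=0.16
# prior=0.10 -> P(synthetic | flagged)=0.68
# prior=0.50 -> P(synthetic | flagged)=0.95
```

When synthetic images make up 1% of what is screened, only about 16% of flagged images are actually synthetic under these assumptions. The same detector output means different things in different populations, which is why a score alone cannot settle origin.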

d) Embedded Provenance Methods (Verifiable)

Embedded provenance methods take a fundamentally different approach.

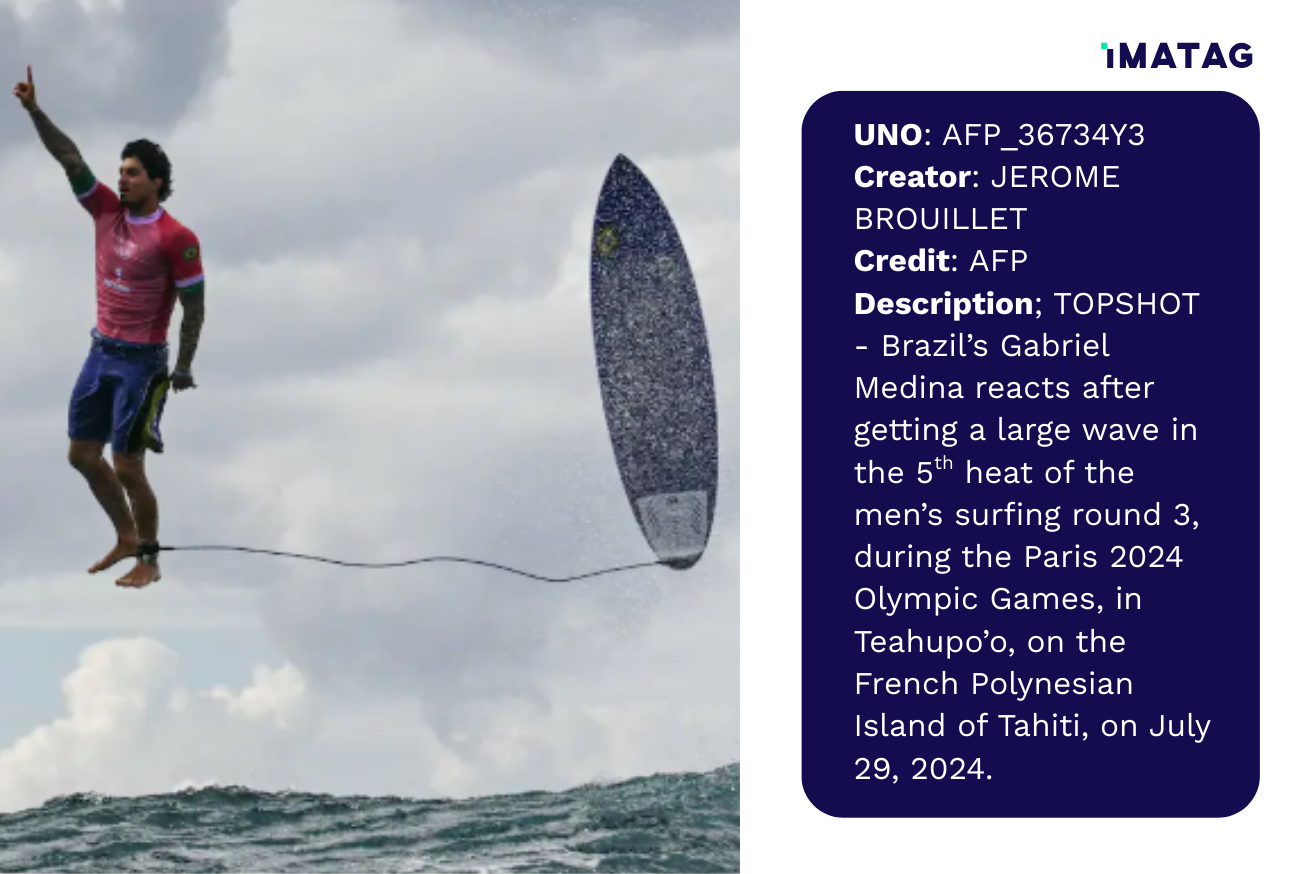

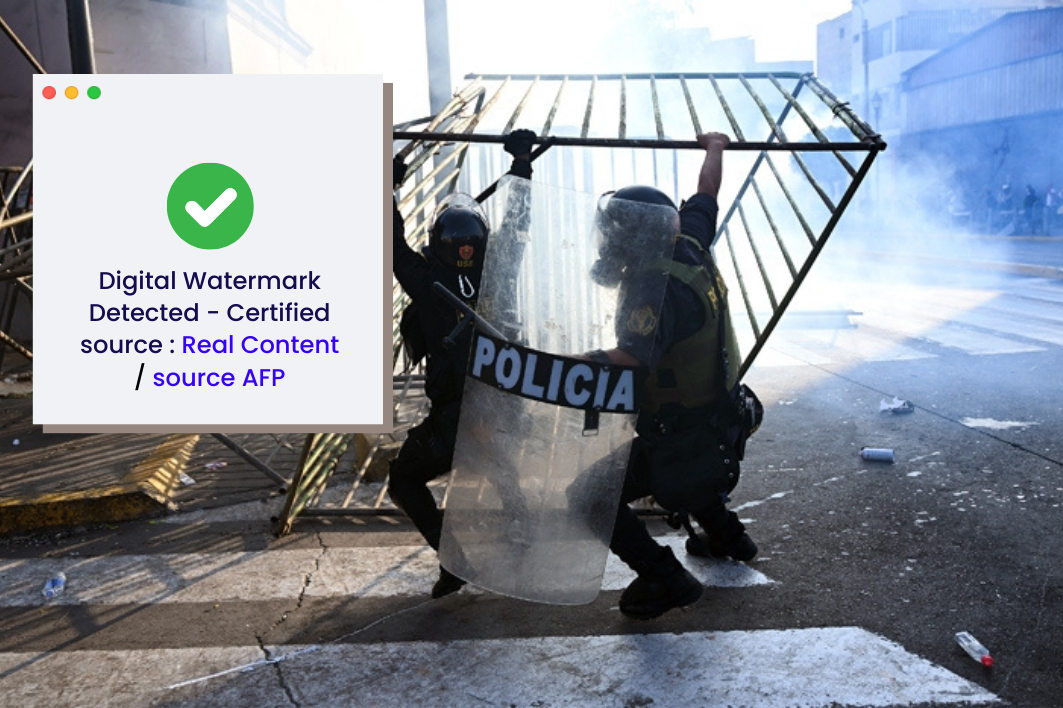

Digital watermarking embeds authenticity signals directly into the pixels of the image, allowing the content itself to carry verifiable information. Provenance frameworks such as C2PA (Coalition for Content Provenance and Authenticity) record structured, cryptographically signed metadata about origin and transformations to establish a transparent history of the asset.

While metadata provides rich contextual information, it can be removed or altered during normal file handling. Embedded watermarking addresses this limitation by binding authenticity signals to the image at the pixel level. In advanced implementations, watermarking can also act as a persistent link to external provenance data, ensuring that even if metadata is stripped, the link to the original provenance information can be re-established.
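The article does not describe Imatag's algorithm. As a generic illustration of "embedding signals into pixels", here is a toy additive spread-spectrum watermark: each bit of a 16-bit ID is spread over the whole image as a faint, key-seeded ±1 pattern and recovered by correlation. The function names, key, strength, and the flattened 128x128 image are all assumptions. This toy relies on exact pixel alignment, so unlike robust schemes it would not survive cropping or resizing.

```python
import random


def carrier(key: str, bit_index: int, n: int) -> list[int]:
    """Pseudorandom +/-1 pattern for one payload bit, reproducible from the secret key."""
    rng = random.Random(f"{key}:{bit_index}")
    return [1 if rng.random() < 0.5 else -1 for _ in range(n)]


def embed(pixels: list[int], payload: list[int], key: str, strength: float = 3.0) -> list[int]:
    """Add one faint keyed pattern per bit: +pattern encodes 1, -pattern encodes 0."""
    marked = [float(p) for p in pixels]
    for i, bit in enumerate(payload):
        sign = 1 if bit else -1
        for j, c in enumerate(carrier(key, i, len(pixels))):
            marked[j] += strength * sign * c
    return [min(255, max(0, round(v))) for v in marked]


def detect(pixels: list[int], n_bits: int, key: str) -> list[int]:
    """Correlate the mean-removed image with each keyed pattern; the sign gives the bit."""
    mean = sum(pixels) / len(pixels)
    centered = [p - mean for p in pixels]
    bits = []
    for i in range(n_bits):
        corr = sum(v * c for v, c in zip(centered, carrier(key, i, len(pixels))))
        bits.append(1 if corr > 0 else 0)
    return bits


# Hypothetical flattened 128x128 grayscale image and a 16-bit asset ID (0xB3A5).
rng = random.Random(0)
image = [rng.randint(40, 215) for _ in range(128 * 128)]
asset_id = [1, 0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 1, 0, 1]
marked = embed(image, asset_id, key="demo-key")

# Simulate a routine transformation: brightness shift plus mild noise.
noise = random.Random(1)
transformed = [min(255, max(0, p + 10 + round(noise.gauss(0, 3)))) for p in marked]
print(detect(transformed, len(asset_id), key="demo-key") == asset_id)  # True
```

Detection needs only the key and the pixels, so the ID survives even when every metadata field is gone; the recovered ID can then serve as the link to external provenance data.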

These methods are designed for verification at scale rather than visual inspection. Their effectiveness depends on persistence, detectability, and the ability to maintain a reliable link between the image and its provenance data across real-world transformations.

When Image Verification Fails

Most image authenticity verification methods fail not at the moment of creation, but after images leave their original environment.

Once images are uploaded to platforms, compressed, cropped, screenshotted, or converted between formats, many verification signals disappear. Metadata can be stripped. Analytical artifacts can be altered. Detection confidence can drop below usable thresholds.

At this stage, the decisive factor is not the method itself, but where the authenticity signal resides. Some approaches rely on inference, estimating authenticity based on patterns or probabilities. Others depend on data attached to the file, which can be removed through routine processing. Only signals embedded directly into the image persist reliably as the content is shared and transformed.

When verification fails, the consequences are concrete.

Legal risk arises when organizations cannot prove image origin or integrity in disputes, investigations, or regulatory contexts. Reputational risk follows when authentic images are challenged as fake, or when manipulated visuals circulate under a trusted brand’s name. Operational risk increases as verification teams are forced into slow, manual checks during crises or misinformation events. Compliance risk grows as emerging AI and media regulations demand stronger transparency and provenance guarantees.

At the moment proof is required most, weak verification leaves only uncertainty.

When Image Authenticity Methods Must Work Together

No single image authenticity verification method solves the problem alone.

Contextual analysis provides understanding. Detection tools enable scale. Provenance standards structure detailed information about origin, authorship, and modification history. The challenge is ensuring that these layers remain connected after images circulate.

Metadata and provenance manifests can describe how content was created, including capture conditions, editing history, or even generative parameters. However, if that information becomes detached from the image file, its evidentiary value is reduced.
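To make "detached from the image file" concrete, here is a heavily simplified stand-in for a signed provenance manifest that binds its claims to the exact asset bytes with a hash. It is not the C2PA data model: the field names, the HMAC signature (used in place of certificate-based signatures), and the helper functions are assumptions for illustration.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical shared secret; real provenance manifests use certificate-based signatures.
SIGNING_KEY = b"demo-signing-key"


def _sign(body: dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(asset: bytes, claims: dict) -> dict:
    """Bind the claims to the exact asset bytes via SHA-256, then sign both together."""
    body = {"claims": claims, "asset_sha256": hashlib.sha256(asset).hexdigest()}
    return {"body": body, "signature": _sign(body)}


def verify(asset: bytes, manifest: Optional[dict]) -> str:
    if manifest is None:
        return "no provenance: manifest missing or stripped"
    if not hmac.compare_digest(_sign(manifest["body"]), manifest["signature"]):
        return "manifest has been tampered with"
    if hashlib.sha256(asset).hexdigest() != manifest["body"]["asset_sha256"]:
        return "asset does not match manifest"
    return "ok"


asset = b"...original image bytes..."
manifest = make_manifest(asset, {"creator": "example-newsroom", "action": "captured"})
print(verify(asset, manifest))            # ok
print(verify(asset + b"\x00", manifest))  # asset does not match manifest
print(verify(asset, None))                # no provenance: manifest missing or stripped
```

An exact hash breaks on any re-encoding, even a legitimate one, and a stripped manifest leaves nothing to check. This is one reason the C2PA specification also describes "soft bindings" such as watermarks.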

This is where embedded approaches play a critical role. Digital watermarking can anchor provenance frameworks such as C2PA at the pixel level, creating a persistent bridge between the image and its associated metadata. Instead of replacing metadata, embedded watermarking strengthens it, ensuring that authenticity signals survive compression, sharing, and reformatting.
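The layered flow described here, with attached metadata as the first source and the pixel-level watermark as the fallback link, can be sketched as a simple resolution step. Everything in it is hypothetical: the registry, the 0xB3A5 ID, and `resolve_provenance` are illustrative names, watermark decoding is assumed to happen elsewhere, and this is neither Imatag's nor C2PA's actual API.

```python
from typing import Optional

# Hypothetical lookup service: watermark payload ID -> stored provenance record.
MANIFEST_REGISTRY: dict = {
    0xB3A5: {"creator": "example-newsroom", "action": "captured"},
}


def resolve_provenance(attached: Optional[dict], watermark_id: Optional[int]) -> tuple:
    """Prefer metadata attached to the file; if it was stripped, use the ID
    decoded from the pixel-level watermark to recover the stored record."""
    if attached is not None:
        return "attached manifest", attached
    if watermark_id is not None and watermark_id in MANIFEST_REGISTRY:
        return "recovered via watermark", MANIFEST_REGISTRY[watermark_id]
    return "unverified", None


# After a platform strips metadata, only the watermark ID (decoded elsewhere) remains.
print(resolve_provenance(None, 0xB3A5)[0])  # recovered via watermark
print(resolve_provenance(None, None)[0])    # unverified
```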

Embedding verification at capture or production time fundamentally changes the trust equation. Authenticity is no longer something reconstructed after circulation; it is carried by the image itself, wherever it goes.

Building Trust That Survives Circulation

In practice, image authenticity verification methods are only as strong as their weakest point of failure. Images are edited, shared, transformed, and repurposed in ways that most verification approaches were never designed to withstand. In this environment, trust cannot rely on inspection alone.

This is why the image ecosystem is gradually moving away from detection and interpretation, and toward embedded authenticity and verifiable provenance. When proof is carried by the image itself, verification becomes possible even after circulation, compression, or reuse.

Imatag’s approach to authenticity is built on this principle. By embedding robust, invisible watermarks directly into visual content, Imatag enables authenticity to persist beyond platforms, formats, and workflows. Provenance and verification are no longer dependent on intact metadata or contextual reconstruction, but remain bound to the image wherever it travels.

Want to "see" an Invisible Watermark?

Learn more about IMATAG's solution to insert invisible watermarks in your visual content.
