Authenticity & AI Detection

December 16, 2025

Detecting AI-Generated Images: Why Robust Watermarking Standards Matter


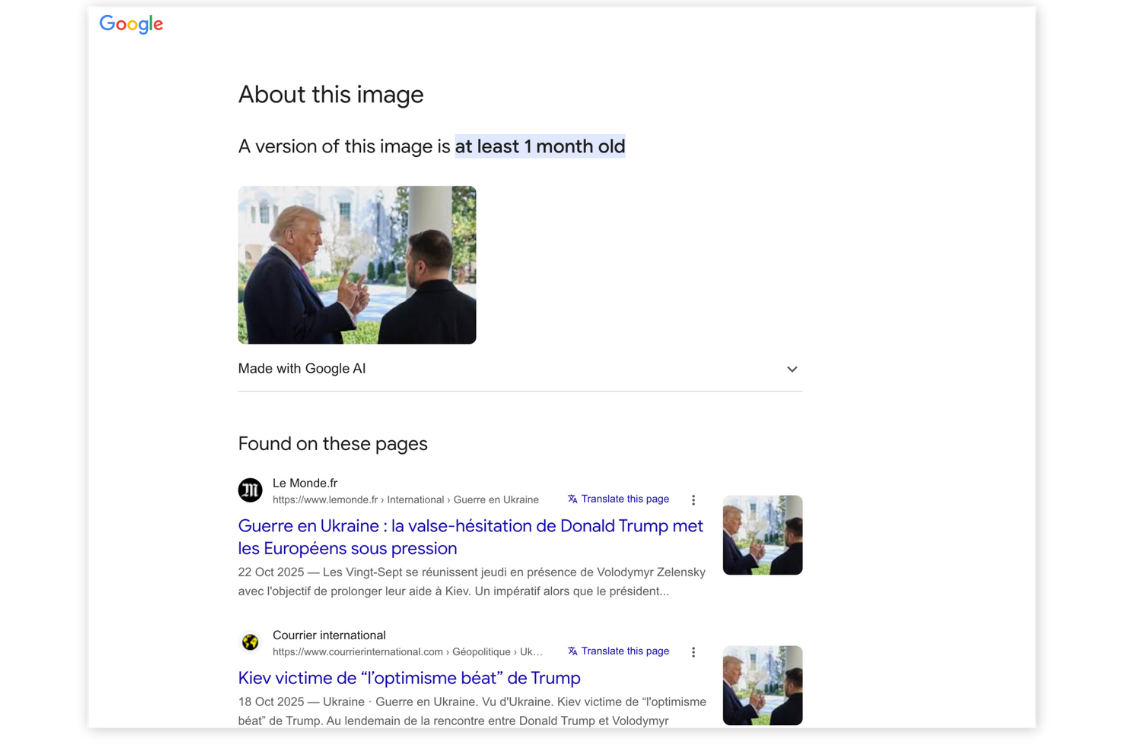

AI-generated images have become ubiquitous across news feeds, creative tools, and social platforms. As they circulate freely, questions arise about authenticity, traceability, and compliance with emerging regulations. Invisible watermarking has emerged as one of the most promising approaches to verify the origin of digital images at scale.

Advances in Invisible Watermarking

Invisible watermarks embed a hidden digital signature directly into an image. While imperceptible to the eye, they can be detected by automated tools to indicate that content was created by an AI system.

Industry investment in this space is accelerating. Efforts like Google’s SynthID show clear momentum and a willingness to set higher standards for safety, transparency, and regulatory alignment, especially as frameworks such as the EU AI Act and the California AI Transparency Act require clearer disclosure of AI-generated media.

As a result, watermarking is establishing itself as a foundational layer for authenticity verification, media trust and provenance, and adherence to upcoming transparency obligations.

The Challenge: Detecting AI-Generated Content

Despite progress, detection remains a highly technical problem. Invisible watermarks must survive all the transformations that images undergo in the real world, whether intentional or not. Even simple actions such as cropping, resizing, adjusting brightness, or modifying the background can alter pixel distributions enough to weaken a watermark.

Large crops, in particular, pose a challenge. When more than 50% of an image is removed, many watermarking methods lose a significant portion of their signal. Content-aware edits (removing an object, regenerating part of a scene, or making local semantic adjustments) can also disrupt the regions where the watermark is embedded.
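The effect of cropping can be illustrated with a toy model. The sketch below is not Imatag's method or any production scheme: it assumes a simple additive spread-spectrum watermark (a keyed pattern of +1/-1 values added to the pixels) and a normalized-correlation detector, with a synthetic list of Gaussian values standing in for an image. All names (`make_pattern`, `embed`, `detection_score`, `score_after_crop`) and parameters are hypothetical.

```python
import math
import random


def make_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern derived from a secret key (toy stand-in)."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels: list[float], pattern: list[int], strength: float = 3.0) -> list[float]:
    """Add the keyed pattern to the pixels at low amplitude."""
    return [p + strength * w for p, w in zip(pixels, pattern)]


def detection_score(pixels: list[float], pattern: list[int]) -> float:
    """Normalized correlation; roughly N(0, 1) when the content is unmarked."""
    n = len(pixels)
    mean = sum(pixels) / n
    energy = sum((p - mean) ** 2 for p in pixels)
    corr = sum((p - mean) * w for p, w in zip(pixels, pattern))
    return corr / math.sqrt(energy)


def score_after_crop(pixels: list[float], pattern: list[int], keep_fraction: float) -> float:
    """Score computed from only the first keep_fraction of the pixels.

    Assumes the detector still knows which pattern samples line up with the
    surviving pixels, which is generous compared with a real-world crop.
    """
    kept = int(len(pixels) * keep_fraction)
    return detection_score(pixels[:kept], pattern[:kept])


if __name__ == "__main__":
    rng = random.Random(0)
    n = 256 * 256
    host = [rng.gauss(128, 40) for _ in range(n)]  # synthetic "image"
    pattern = make_pattern(key=1234, n=n)
    marked = embed(host, pattern)

    print(f"unmarked: {detection_score(host, pattern):.1f}")
    for fraction in (1.0, 0.5, 0.25, 0.1):
        score = score_after_crop(marked, pattern, fraction)
        print(f"marked, {fraction:.0%} kept: {score:.1f}")
```

In this simplified model the score falls roughly with the square root of the fraction of pixels kept, so a heavy crop can push a comfortably detectable mark toward the decision threshold even when the detector is perfectly aligned. Real schemes must also recover that alignment, which the sketch assumes away.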

Recent academic work, including the UnMarker attack from the University of Waterloo, has shown that subtle frequency-based transformations can significantly weaken or erase watermarks across many methods without introducing visible artifacts.

And beyond removal, the inverse threat must be considered: if a watermark can be artificially added to a real photograph, the entire verification system becomes unreliable. These challenges are not specific to any one solution; they reflect the fundamental complexity of watermarking itself.

Why Detection Certainty Matters

People with malicious intent have discovered that it is easy to get around the disclosure rules. Some simply ignore them. Others manipulate the videos to remove the identifying watermarks. The New York Times found dozens of examples of Sora videos appearing on YouTube without the automated label.

Several companies have sprung up offering to remove logos and watermarks. And editing or sharing videos can wind up removing the metadata embedded in the original video indicating it was made with A.I.

This erosion of provenance signals comes at a time when audience expectations are moving in the opposite direction. “Viewers increasingly want more transparency about whether the content they’re seeing is altered or synthetic,” said Jack Malon, a YouTube spokesman.

In this context, the consequences of imperfect detection are not theoretical. A slightly cropped or filtered image can mislead millions if its watermark becomes undetectable. A deepfake can circulate during an election cycle without being recognized as synthetic. Authentic creators may find themselves incorrectly flagged as producing AI content, while actual AI-generated visuals evade identification in sensitive contexts such as journalism or legal investigations.

In these scenarios, “mostly reliable” isn’t enough. Watermarking must operate reliably under the messy, unpredictable conditions of real-world image circulation.

Imatag’s Standard for Ultra-Robust Watermarking

Imatag’s approach focuses on achieving a level of reliability suitable for high-stakes verification.

A central requirement is an extremely low false-positive rate. Our detection threshold is calibrated so that the probability of a false positive is approximately 1 in 1,000,000,000,000 for each positive identification. Recent internal research has reinforced how critical strict thresholds are, as some watermarking methods can produce occasional false positives under certain conditions.
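To give a sense of what a false-positive rate around 1 in 1,000,000,000,000 implies, the sketch below makes one simplifying assumption that is ours, not a description of Imatag's detector: the detection score on unmarked images follows a standard normal distribution. Under that assumption it computes the score threshold for a target false-positive rate and the expected number of false alarms when many unmarked images are checked. The function names are hypothetical.

```python
from statistics import NormalDist


def z_threshold(false_positive_rate: float) -> float:
    """Score threshold whose upper-tail probability equals the target rate.

    Assumes scores on unmarked images are standard normal (an illustrative
    assumption, not a property of any specific detector).
    """
    # Use the lower tail and flip the sign to avoid computing 1 - tiny_number.
    return -NormalDist().inv_cdf(false_positive_rate)


def expected_false_positives(false_positive_rate: float, images_checked: int) -> float:
    """Expected number of unmarked images wrongly flagged as watermarked."""
    return false_positive_rate * images_checked


if __name__ == "__main__":
    for rate in (1e-6, 1e-9, 1e-12):
        threshold = z_threshold(rate)
        alarms = expected_false_positives(rate, 1_000_000_000)
        print(f"rate {rate:.0e}: threshold {threshold:.2f}, "
              f"expected false alarms per billion images {alarms:g}")
```

Under this model, a rate of 1 in a million would wrongly flag about a thousand images per billion checked, while 1 in a trillion brings the expectation down to about one in a thousand such batches. The stricter threshold costs only a moderately higher score requirement, which is why robustness of the embedded signal matters so much.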

Another key principle is semantic binding: the watermark should remain detectable within the core meaning of the image, even if peripheral or stylistic elements change. This is complemented by resistance to routine edits such as cropping, flipping, or brightness adjustment, operations that can occur naturally as images are shared.

To support trust and accountability, the system is evaluated through extensive stress tests and transparent benchmarks. And to ensure compatibility across the ecosystem, Imatag’s watermarking aligns with emerging provenance frameworks such as C2PA.

Building a Stronger Industry Standard

Progress from major actors, including initiatives like SynthID, shows how quickly the field is advancing and how seriously industry leaders take the challenge of provenance and safety. Yet the challenge of robust detection is shared by everyone. Watermarking will only fulfill its promise if it meets strong, verifiable standards capable of withstanding everyday transformations and adversarial manipulation.

Moving forward, collaboration will be essential. Stronger, shared standards benefit everyone: platforms, creators, researchers, regulators, and the public. Invisible watermarking is set to become a foundational layer of trust, yet its long-term success depends on meeting standards that are not only ambitious but also verifiable and widely tested.

